Newsletter – WP 5.3. OLEUM Databank

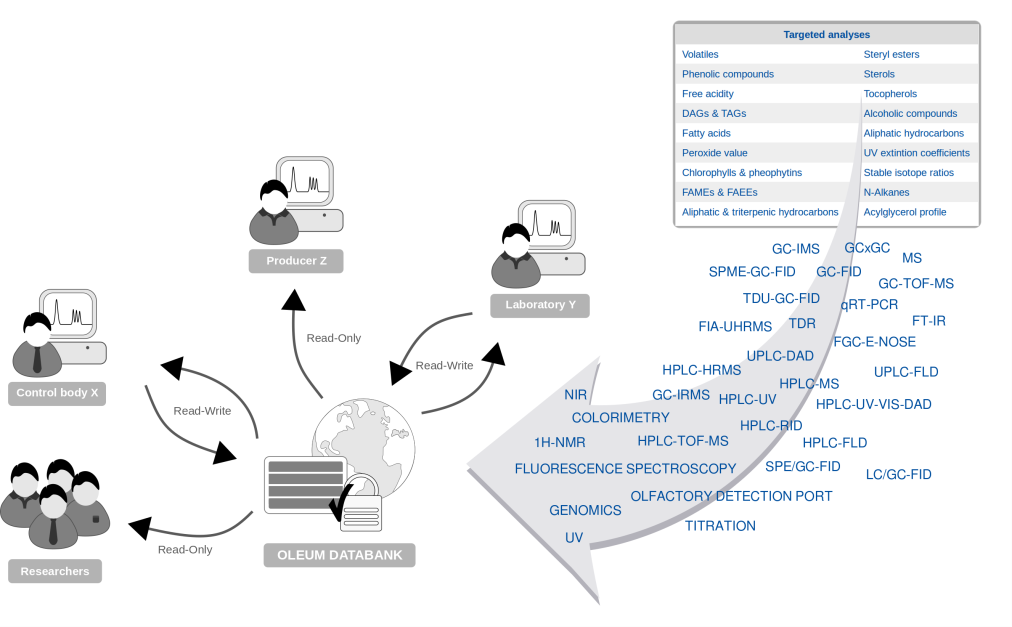

In times of increasing competition due to globalization and today's growing customer awareness for food quality, food control bodies worldwide are facing the major challenge of ensuring food safety, authenticity and quality. As part of the EU H2020 OLEUM project not only a large number of established and new analytical techniques are being scrutinized in order to deliver harmonized analytical protocols. Also, a database, the OLEUM Databank, is being implemented to eventually provide the European control bodies with a shared platform holding analytical data together with relevant additional information (metadata) on reference oils analysed as part of the project. The availability of this data will permit a more effective collaboration and proficiency of the authorized quality control laboratories in Europe and a better global harmonization. The OLEUM Databank will allow facilitating the cross-experiment comparison, sharing anchor results, calibration curves or even spectra or chromatograms. Apart from questions regarding the architecture of the databank, a major challenge is to cope with heterogeneous analytical data. Not only shall the databank allow the upload of analytical measurements but also to review these, even if the proprietary vendor software which acquired the data is not available to the reviewing person. More than 20 different techniques will result in measurements saved in 20+ different data formats and the number of data formats may even increase due to the fact that in most cases different vendors are offering measurement devices for the same analytical technique.

Image 1: Heterogeneous analytical data will be uploaded to OLEUM databank and shared between stakeholders

The initial work within task 5.3 was to gather example files of raw measurement data from the project partners involved in analytical tasks. Most of these files are not in an open format but in the proprietary format of the vendor of the instrument used to acquire the data. Some of these files could already be read by the software OpenChrom, but a considerable share had to be decoded to make them accessible. Approximately 70% of the supplied files can now be fully accessed, and 80% can be read at least partially. Decoding these formats for interoperability purposes is an ongoing task.
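The coverage figures above distinguish files that can be fully accessed from files that can only be read to some extent. A minimal Python sketch of how such bookkeeping might be organised is a registry that maps file extensions to format readers, each tagged with how completely it decodes its format. The extensions, reader names and the `FormatReader`/`coverage` helpers are hypothetical illustrations; this is not how OpenChrom or the OLEUM Databank is actually implemented.

```python
from dataclasses import dataclass
from enum import Enum
from pathlib import Path
from typing import Callable, Dict, Iterable


class Access(Enum):
    """How completely a file format can currently be decoded."""
    FULL = "full"
    PARTIAL = "partial"
    NONE = "none"


@dataclass(frozen=True)
class FormatReader:
    """A decoder for one (hypothetical) vendor file format."""
    name: str
    access: Access
    read: Callable[[Path], dict]


# Registry keyed by lower-case file extension. The extensions registered
# below are hypothetical placeholders, not real vendor formats.
REGISTRY: Dict[str, FormatReader] = {}


def register(extension: str, reader: FormatReader) -> None:
    REGISTRY[extension.lower()] = reader


def access_level(path: Path) -> Access:
    """Files whose extension has no registered reader count as not readable."""
    reader = REGISTRY.get(path.suffix.lower())
    return reader.access if reader else Access.NONE


def coverage(paths: Iterable[str]) -> Dict[str, float]:
    """Share of files that are fully readable and readable at least partially."""
    levels = [access_level(Path(p)) for p in paths]
    if not levels:
        return {"full": 0.0, "at_least_partial": 0.0}
    full = sum(level is Access.FULL for level in levels)
    partial = sum(level is Access.PARTIAL for level in levels)
    return {
        "full": full / len(levels),
        "at_least_partial": (full + partial) / len(levels),
    }


def stub_reader(path: Path) -> dict:
    """Placeholder decoder that only records the file name."""
    return {"source": path.name}


register(".vendora", FormatReader("Vendor A (hypothetical)", Access.FULL, stub_reader))
register(".vendorb", FormatReader("Vendor B (hypothetical)", Access.PARTIAL, stub_reader))
print(coverage(["run1.vendora", "run2.VENDORA", "run3.vendorb", "run4.unknown"]))
# {'full': 0.5, 'at_least_partial': 0.75}
```

Keeping the access level next to each reader means the coverage statistics update automatically as further formats are decoded.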

The beta version of the OLEUM Databank has been online since September 2018 and is currently being evaluated internally by a few partners. Access is planned to be extended to all project partners in January for a broad evaluation.

All software components implemented in the database were selected because they are open source and the best fit for the purpose. For the beta version, the user interface has so far focused on building up and managing the data to be made available, so that the project partners have the greatest possible flexibility to enter the heterogeneous metadata associated with the reference samples and with each of the various analytical procedures. Since the database will only be useful if all necessary information is provided along with the analytical results, there are currently three sections for data management:

- Manage Samples: Here users add the metadata of authentic and other reference samples which have been analysed by any technique and whose results/raw data is going to be provided. The entry fields are pre-set with some being mandatory and others optional.

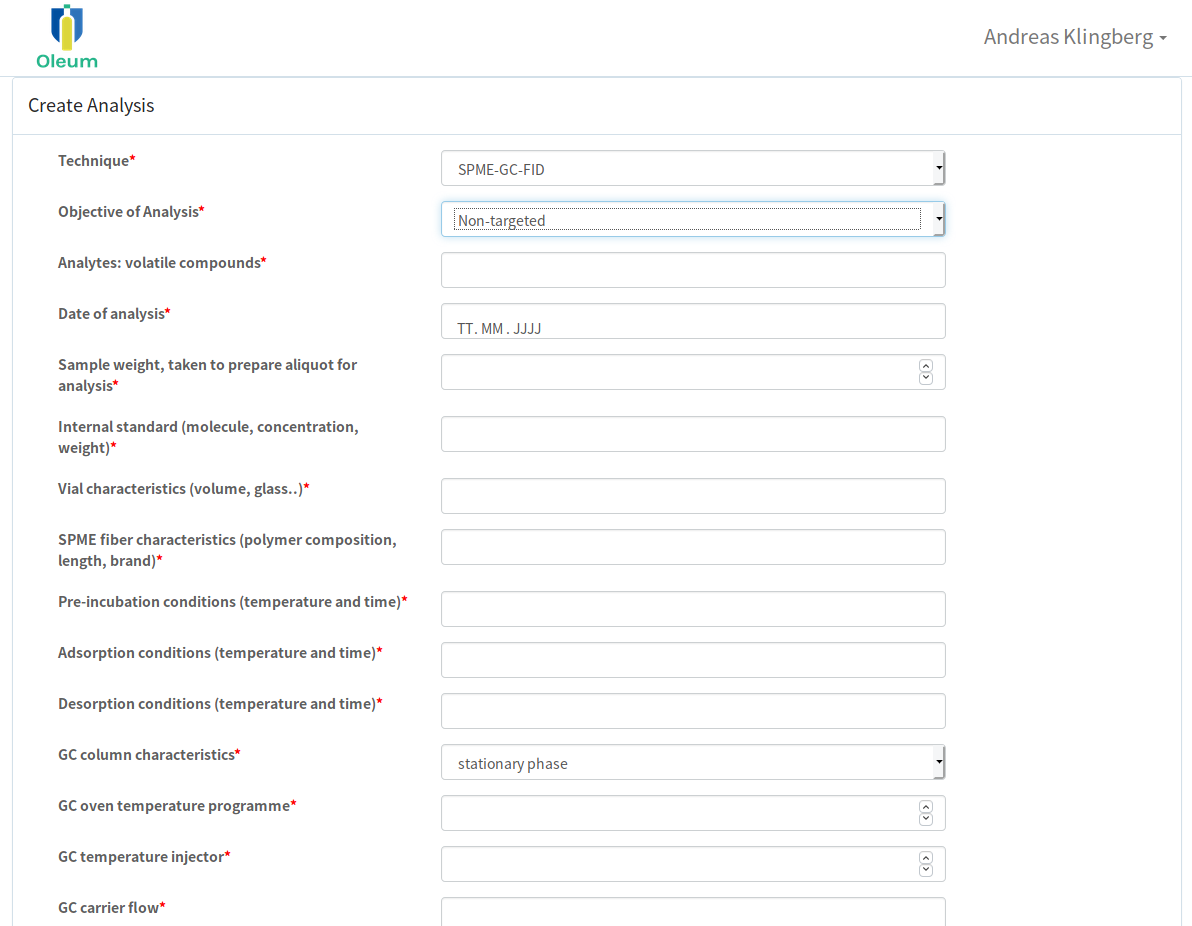

- Manage Techniques: this section is meant to build up all necessary metadata fields needed for each relevant analytical technique. Since the metadata of the various techniques is extremely diverse, the fields have not been pre-set yet. This allows building query forms which are tailored to collect the necessary metadata for each measurement data provided.

Image 2: Manage Analysis – Example of Interface to enter metadata for SPME-GC-FID measurement

- Manage Analysis: this section is for the actual upload of raw data, results, etc. But additionally the data provider has to provide the metadata on the analysis. But this is only possible if the according query form of the applied analytical technique has been made available under the section "Manage Techniques".
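The interplay of the three sections can be sketched as follows: the sample form has pre-set mandatory and optional fields, technique forms are defined by users, and analysis metadata can only be checked once a form for its technique exists. This is a minimal Python sketch under assumed names; the field names (`sample_code`, `fibre`, `column`, ...) and the `Databank` class are hypothetical and do not reflect the actual OLEUM Databank schema.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass(frozen=True)
class FieldSpec:
    """One entry field of a metadata form (field names below are hypothetical)."""
    name: str
    mandatory: bool = False


def check_fields(specs: List[FieldSpec], metadata: Dict[str, str]) -> List[str]:
    """Report missing mandatory fields and fields the form does not define."""
    known = {spec.name for spec in specs}
    problems = [f"missing mandatory field '{spec.name}'"
                for spec in specs
                if spec.mandatory and not metadata.get(spec.name)]
    problems += [f"unknown field '{key}'" for key in metadata if key not in known]
    return problems


# 'Manage Samples': a pre-set form with mandatory and optional fields.
SAMPLE_FORM = [
    FieldSpec("sample_code", mandatory=True),
    FieldSpec("sample_type", mandatory=True),  # e.g. authentic or other reference sample
    FieldSpec("comment"),
]


@dataclass
class Databank:
    # 'Manage Techniques': one user-defined form per analytical technique.
    technique_forms: Dict[str, List[FieldSpec]] = field(default_factory=dict)

    def define_technique(self, name: str, specs: List[FieldSpec]) -> None:
        self.technique_forms[name] = specs

    def check_analysis(self, technique: str, metadata: Dict[str, str]) -> List[str]:
        """'Manage Analysis': metadata can only be checked against an existing form."""
        specs = self.technique_forms.get(technique)
        if specs is None:
            return [f"no metadata form defined for technique '{technique}'"]
        return check_fields(specs, metadata)


bank = Databank()
bank.define_technique("SPME-GC-FID", [
    FieldSpec("fibre", mandatory=True),
    FieldSpec("column", mandatory=True),
    FieldSpec("comment"),
])
print(check_fields(SAMPLE_FORM, {"sample_code": "S-001"}))
# ["missing mandatory field 'sample_type'"]
print(bank.check_analysis("SPME-GC-FID", {"fibre": "fibre-A", "oven": "x"}))
# ["missing mandatory field 'column'", "unknown field 'oven'"]
print(bank.check_analysis("GC-QTOF-MS", {}))
# ["no metadata form defined for technique 'GC-QTOF-MS'"]
```

Holding technique forms as data rather than code matches the choice of not pre-setting technique fields: a new technique can be added without changing the software itself.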

In addition, some focus has been placed on user rights management, which allows the administrator to fine-tune the access rights granted to each user. At first glance, three user levels with fixed rights might seem sufficient: “administrators”, with full access at all levels; “data providers”, with access to all features needed to upload data; and “registered users”, who can only review the data held in the database. At the current stage of the project, however, it is not yet clear which rights “data providers” and basic “registered users” should be given, or whether unregistered users should also have limited access.
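One common way to combine fixed user levels with per-user fine adjustment is to give each role a default permission set and let the administrator grant or revoke individual rights on top of it. The Python sketch below assumes four hypothetical permissions (`VIEW`, `UPLOAD`, `MANAGE_TECHNIQUES`, `MANAGE_USERS`) and gives unregistered users no rights; the actual rights in the databank are, as noted, still under discussion.

```python
from dataclasses import dataclass
from enum import Flag
from typing import Dict, Optional


class Perm(Flag):
    """Hypothetical permission set; the real databank's rights may differ."""
    VIEW = 1
    UPLOAD = 2
    MANAGE_TECHNIQUES = 4
    MANAGE_USERS = 8


NO_RIGHTS = Perm(0)

# Fixed defaults for the three user levels discussed in the text.
ROLE_DEFAULTS: Dict[str, Perm] = {
    "administrator": Perm.VIEW | Perm.UPLOAD | Perm.MANAGE_TECHNIQUES | Perm.MANAGE_USERS,
    "data_provider": Perm.VIEW | Perm.UPLOAD,
    "registered_user": Perm.VIEW,
}
# Whether unregistered users get limited access is still open; here they get none.
ANONYMOUS_RIGHTS = NO_RIGHTS


@dataclass
class User:
    name: str
    role: str
    granted: Perm = NO_RIGHTS  # per-user additions set by the administrator
    revoked: Perm = NO_RIGHTS  # per-user removals set by the administrator

    def rights(self) -> Perm:
        """Role defaults, adjusted by the administrator's per-user overrides."""
        return (ROLE_DEFAULTS[self.role] | self.granted) & ~self.revoked


def can(user: Optional[User], perm: Perm) -> bool:
    rights = user.rights() if user is not None else ANONYMOUS_RIGHTS
    return perm in rights


alice = User("alice", "data_provider")
bob = User("bob", "registered_user", granted=Perm.UPLOAD)
carol = User("carol", "data_provider", revoked=Perm.UPLOAD)
print(can(alice, Perm.UPLOAD), can(bob, Perm.UPLOAD), can(carol, Perm.UPLOAD), can(None, Perm.VIEW))
# True True False False
```

With this design, changing what a whole user level may do means editing one entry in `ROLE_DEFAULTS`, while exceptions for individual users stay with those users.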

The biggest current challenge in developing the database is getting the data upload function to work smoothly with data folders as large as 2 to 4 GB, as generated, for example, by a GC-QTOF-MS instrument. Upload performance will, however, always remain highly dependent on the quality and speed of the internet connection and on the uploading computer.
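Transfers of this size are often made more robust by splitting the data into chunks, verifying each chunk with a checksum and resending only what is missing after an interruption. The following Python sketch illustrates that idea under several assumptions: the data folder has already been packed into a single archive file, the 8 MiB chunk size is arbitrary, and `InMemoryServer` stands in for a hypothetical upload endpoint. It is not the databank's actual upload mechanism.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Callable, Dict, Iterator, Tuple

# Hypothetical default; a real value would be tuned to the connection and server limits.
CHUNK_SIZE = 8 * 1024 * 1024  # 8 MiB


def iter_chunks(path: Path, chunk_size: int) -> Iterator[Tuple[int, bytes]]:
    """Read a large file piece by piece instead of loading it into memory at once."""
    with path.open("rb") as fh:
        index = 0
        while True:
            data = fh.read(chunk_size)
            if not data:
                break
            yield index, data
            index += 1


def upload(path: Path,
           send: Callable[[int, bytes, str], None],
           already_received: Dict[int, str],
           chunk_size: int = CHUNK_SIZE) -> int:
    """Send every chunk the server has not yet confirmed; return how many were sent."""
    sent = 0
    for index, data in iter_chunks(path, chunk_size):
        digest = hashlib.sha256(data).hexdigest()
        if already_received.get(index) == digest:
            continue  # resume: this chunk already arrived intact
        send(index, data, digest)
        sent += 1
    return sent


class InMemoryServer:
    """Stand-in for a hypothetical chunk-receiving endpoint of the databank."""

    def __init__(self) -> None:
        self.chunks: Dict[int, bytes] = {}

    def receive(self, index: int, data: bytes, digest: str) -> None:
        if hashlib.sha256(data).hexdigest() != digest:
            raise ValueError(f"chunk {index} corrupted in transit")
        self.chunks[index] = data

    def received(self) -> Dict[int, str]:
        return {i: hashlib.sha256(d).hexdigest() for i, d in self.chunks.items()}

    def assemble(self) -> bytes:
        return b"".join(self.chunks[i] for i in sorted(self.chunks))


with tempfile.TemporaryDirectory() as tmp:
    raw = Path(tmp) / "measurement.raw"
    raw.write_bytes(b"0123456789")
    server = InMemoryServer()
    print(upload(raw, server.receive, server.received(), chunk_size=4))  # 3 chunks sent
    del server.chunks[1]  # simulate a chunk lost when the connection dropped
    print(upload(raw, server.receive, server.received(), chunk_size=4))  # only 1 resent
    print(server.assemble() == b"0123456789")  # True
```

Because only unconfirmed chunks are resent, a dropped connection costs at most one chunk's worth of transfer rather than the whole multi-gigabyte upload.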

The database search interface implemented at this stage is still basic. Based on the review of the beta version by all project partners, implementing database search and review capabilities will be the primary focus in the coming months.